
Doctors Reveal That Swallowing Leads to…


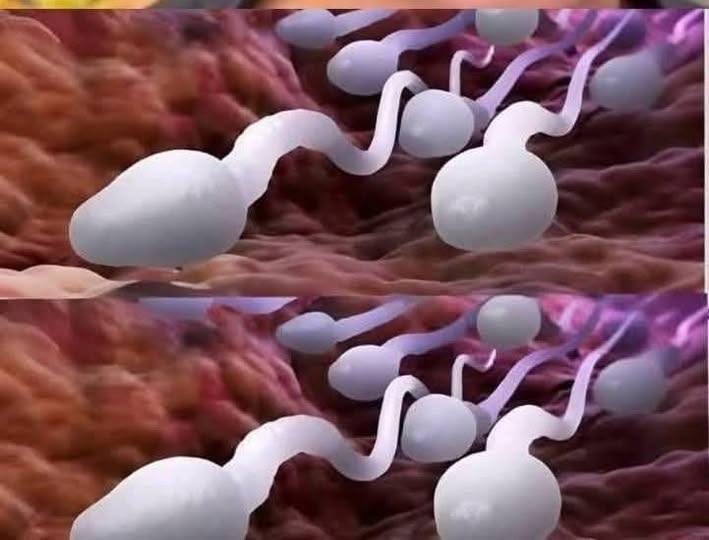

From gut health to emotional wellbeing, doctors say swallowing isn’t just the final step of eating—it’s a crucial part of how your body communicates, regulates, and even heals itself.
